Introduction

A decade ago, Amazon released AWS. Since then, the adoption of public clouds such as GCE, Rackspace, Joyent, CiscoCloud and many others, along with tools such as CloudStack and OpenShift for creating private clouds, has been nothing short of outstanding. Two problems, however, remain.

- We don’t know how to build the right software for the cloud. Often developers simply copy their monolith to the cloud.

- Clouds are not ‘approachable’ or easy to use for software developers. We are only now learning how to take full advantage of them.

Our first attempts, as an industry, were to create tools like Puppet and Chef, which ‘tamed’ clouds but effectively replicated the actions of a human operator. This change was led by Ops departments, which slowly became responsible for a growing part of the application logic related to the infrastructure. Now, with the growing popularity of containers and changes in how we organise systems as microservices, we are able to think about the creation of Cloud Native Applications: applications that can take full advantage of a modern cloud and allow Development to move the infrastructure logic into the normal development process.

Recent History

Cloud Native Applications are the result of a recent history with three key aspects:

- Docker and software containers.

- Microservices.

- The DevOps movement.

Docker and Software Containers

Over the last two years, Docker has taken the software development world by storm. Docker enables the creation of containers with a fully functional OS plus any application and all its dependencies in milliseconds. Even better, it is intuitive to developers and strongly resembles the well-defined development cycle used in most programming languages: sources in version control used to build binaries that are pushed into artifact repositories that support dependency management.

This change, as well as helping to create a vibrant community around containers, led most of the big software vendors and service providers, such as IBM, Amazon, Red Hat, Microsoft and Cisco, to get behind container technologies.

This huge shift is very similar to what happened around VMs. VMs led to the creation of modern clouds and operations automation tools like CFEngine, Puppet, Chef and Ansible. The shift to VMs reduced the time needed to deploy a single machine from days to minutes. This change allowed the move from monolithic systems running on a few physical machines to distributed systems running on dozens or even hundreds of VMs.

In turn, containers reduced the deployment time to seconds and allowed us to take the next step towards small, interrelated but independent services that are commonly called microservices.

Containers are essential for effectively building microservices, as they make it possible to run a very small application with all its dependencies without the burden of an additional running OS. As a case in point, Netflix, who are among the pioneers of microservices, recently switched to containers too.

Microservices

As with containers, microservices were not born in the last two years but have existed for quite a long time. Microservice architectures ask us to split systems into smaller pieces, which is especially useful when the complexity grows beyond the ability of a single team to develop and maintain the service effectively.

We see the growth of microservices because we are now able to deploy them quickly and efficiently using containers and other complementary technologies. Containers and microservices can exist without each other, but together they create an extremely powerful combination that can significantly reduce the time it takes to deliver value to customers.

Many articles have been written that suggest that microservices are “not a free lunch”, and indeed they do create new challenges. In the world of microservices, we are working very hard to split the functionality into small and independent services, each running multiple instances, potentially on a variety of platforms. They are all potentially built in a different way and have independent release timelines. All this may lead to a deployment and maintenance nightmare.

Dunbar’s Number - A Small Digression

“Cattle and pets” is an excellent metaphor, and the reason for its existence can be explained by Dunbar’s number. Dunbar suggested a number, roughly equal to 150, as the cognitive limit to the number of people with whom one can maintain stable social relationships.

My hypothesis is that the same number can be used to measure the limit for the number of servers that can be named and given personal care. Once we lose the ability to remember our servers by name, we need to create a new, higher level entity and start giving names to the instances of this new thing.

Microservices, which are often implemented as thousands of (sometimes) short-lived containers, have pushed us over Dunbar’s number. This means that we need to start dealing with them as if they were ‘cattle’ that can be easily and automatically replaced, and not like ‘pets’ that we love and give personal names.

What will be our next pets? Possibly microservices. We can give them names and, for the foreseeable future, treat them with care. However, when the day comes that each developer needs to know over 150 microservices by name, they will be destined to become cattle, too.

DevOps

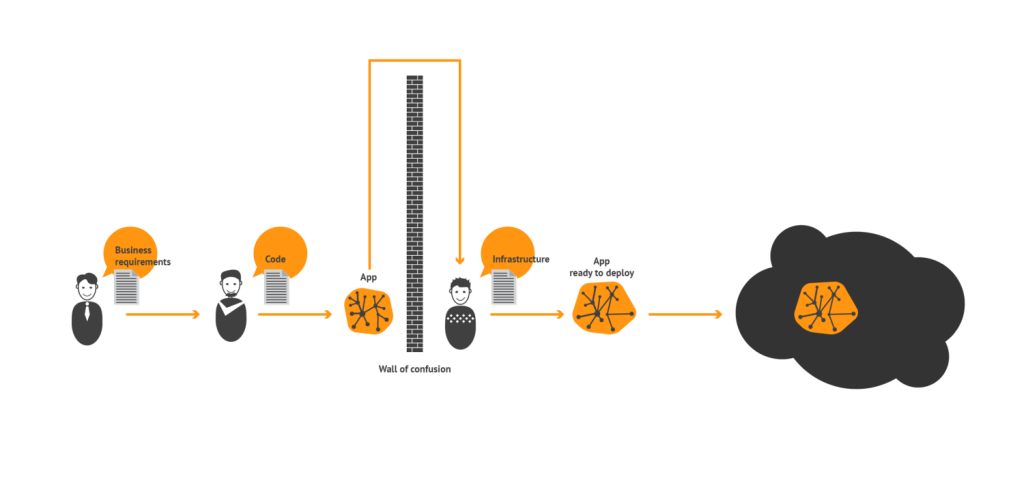

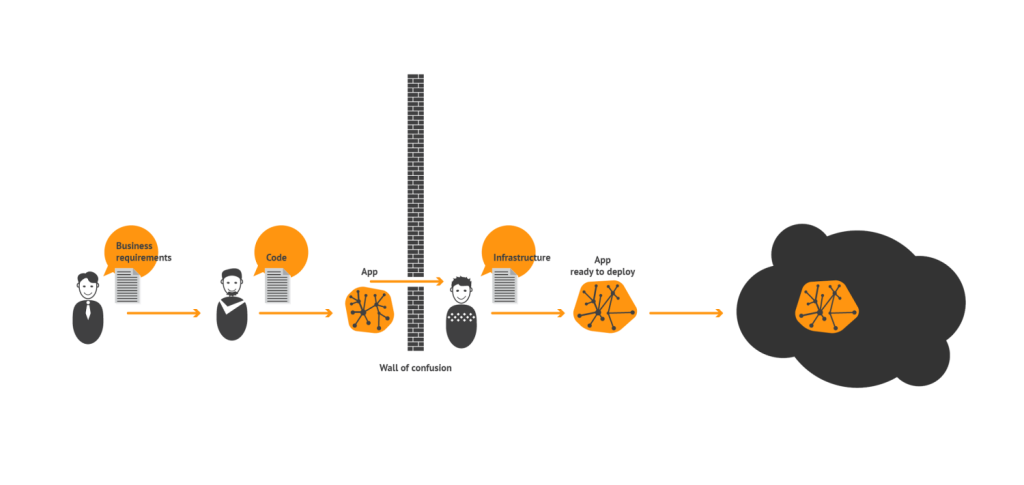

In his seminal article defining DevOps, Damon Edwards explained the principle of the Wall of Confusion between Dev and Ops. The wall prevents the steady flow of finished features towards users, since the software needs to be repackaged and reconfigured by different people using different tools.

The solution proposed by the DevOps movement is to create a new team culture that includes both Dev and Ops, with a shared language, tools and processes.

It is difficult to disagree with the benefits brought to the software delivery world by DevOps. However, those benefits are not sufficient these days.

Infrastructure operations such as scaling parts of the application based on load, monitoring failures, and migrating services between datacenters are not separable from the core functionality of the application.

Therefore, DevOps is just opening a window in the Wall of Confusion instead of removing it entirely.
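To make the point about infrastructure logic more concrete, here is a minimal sketch of a scaling rule written as ordinary application code. Everything in it is an assumption made for illustration: the function name `desired_replicas`, the idea of measuring load in requests per second, and the default limits are hypothetical and do not come from any specific platform or tool.

```python
import math


def desired_replicas(requests_per_second: float,
                     capacity_per_replica: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Return how many instances of a service the observed load calls for.

    Hypothetical rule: each replica handles a fixed number of requests
    per second, and the result is clamped to [min_replicas, max_replicas].
    """
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    # Round up so that the load never exceeds the total capacity.
    needed = math.ceil(requests_per_second / capacity_per_replica)
    # Never scale below the minimum or above the maximum.
    return max(min_replicas, min(max_replicas, needed))
```

A rule like this depends on knowledge only the development team has, such as how much load one instance can handle, which is why it is hard to keep it on the Ops side of the wall.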

Cloud Native Applications

There are a number of pressures to create smaller services. Partly, it’s organisational: companies find it easier to break themselves into small ‘two pizza’ teams rather than managing a creative process within a hierarchy. It is also partially necessary: as the IoT and the data it produces grow, so too must the systems that manage the IoT and process the data.

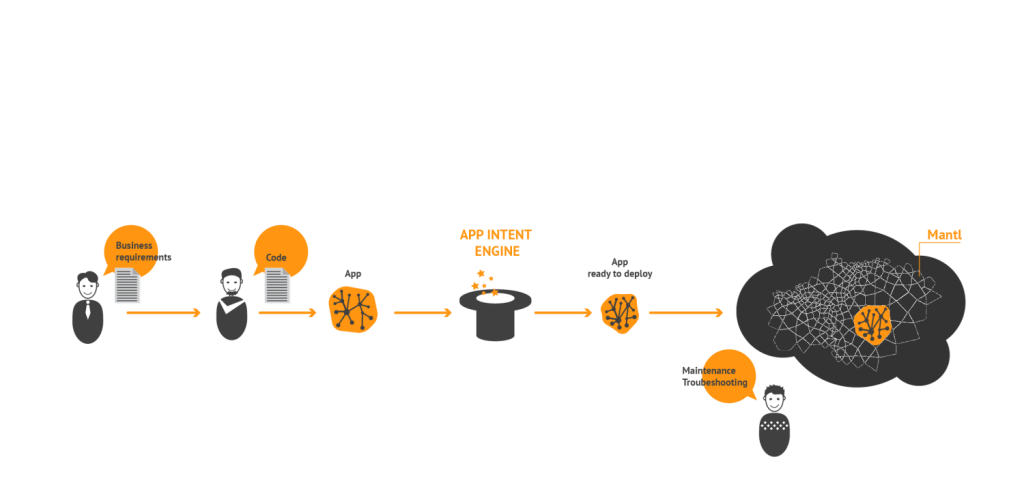

Mantl, Shipped and other projects led by Cisco are built to enable fully automatic creation and deployment of cloud-native applications. Instead of just allowing VMs to be booted up in a datacenter managed by somebody else, Mantl and Shipped allow complex interactions and management ‘out of the box’.

Once upon a time, software development was broken up into development, testing and deployment teams. Each of those teams was created to address a specific challenge, such as testing. Once the challenge was overcome, communicating with the other teams became the biggest issue and thus, years later, those teams were incorporated back into the normal development teams. The communication problems vanished.

The promise of cloud native applications is to create a post-DevOps state where teams can be truly independent. To achieve this, we need to make the cloud invisible and provide simple interfaces that allow developers to interact with the cloud and surrounding services.
