We seem to say this a lot at Container Solutions, but the combination of microservice architectures and the practice of DevOps have smashed apart the assumptions made by traditional tools. None more so than in the monitoring sector, which we’ve talked about in the semantic monitoring and monitoring performance blog posts.

Traditional monitoring solutions like Nagios and New Relic (to give them credit, they do appear to be progressing) initially made two key assumptions: applications are monolithic and their users are operations staff. Both assumptions have been demolished, which left a gaping hole in the marketplace for microservice-come-devops monitoring solutions. Since we’re also keen on open-source solutions there are only a few options. Some notable solutions are Graphite, InfluxDb and the focus of this article, Prometheus.

Prometheus has been accepted by the Cloud Native Computing Foundation as a hosted, incubating project, which helps to justify the need of such a technology and verify that Prometheus is a promising solution to that problem.

The problem, when dressed down to its bare necessities - sorry, my daughter has just watched the Jungle Book for the tenth time - is that developers are assuming long term responsibility of the code that they write. They require monitoring on a level that is completely different to traditional operational tasks. In order to ensure that their code is working correctly/optimally, they require monitoring to be an integral part of the code. In fact, I’m going to go as far to suggest that monitoring is the most important part of the code, because only these metrics are capable of truly verifying that requirements have been met.

Hence, we, as developers, require a code-centric open-source monitoring solution that is scalable and is capable of: accepting metrics, storing metrics, alerting and providing some way to visualise and inspect the metrics for ongoing optimisation and analysis. For our most recent projects we’ve chosen Prometheus and this post will show you how to integrate it into Java and Go based microservices.

Introducing Prometheus

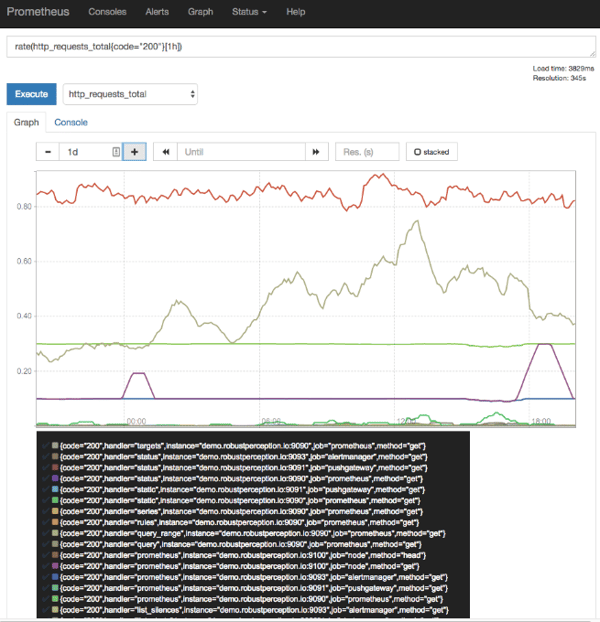

Prometheus is a simple, effective open-source monitoring system. It is in full-time use in the projects being developed in collaboration with our good friends over at Weaveworks. It has become particularly successful because of its intuitive simplicity. It doesn’t try to do anything fancy. It provides a data store, data scrapers, an alerting mechanism and a very simple user interface.

There is a significant amount of online discussion about Prometheus’ data store. To cut a (very) long story short, the data is stored as key value pairs in memory cached files. Therefore, if you have a significant amount of metrics/services, then you need to be careful about storage requirements. A single instance can manage a large number of services/metrics. Suggestions seem to be around one million scrapes per ten seconds - 100,000 / s (reference). After this point the initial recommendation is to split instances per function (frontend/backend/etc.). After that, sharding is possible, but is manually configured. This is potentially one area that is harmed by the simplicity, although it is unlikely to be a serious issue for all but the most demanding users.

The data scrapers, as the name suggests, use a pull model. I.e. Prometheus has to pull metrics from individual machines and services. This does mean that you have to plan to provide metrics endpoints in your custom services (there are automatic scrapers for a range of common out-of-the-box technologies), but this isn’t as bad as it sounds (I’ll show you how to add metrics to Java and Go based services later). Some might argue that this is rather antiquated, but I like it, because it is obvious. And when things are obvious, you don’t need to spend man-hours (man-weeks for some monitoring systems) training users and developers. When things are obvious, it’s much harder to make mistakes.

The only thing to be careful about is the naming of your metrics. It’s really easy to let the numbers of metrics get out of hand and it does help to have some central governance over the naming of them. For more information see the Prometheus documentation.

Prometheus’ use of alerting tools is also very simple. It is possible to send alerts to a number of services: Email, Generic Webhooks, PagerDuty, HipChat, Slack, Pushover, Flowdock. All of the setup is performed via configuration and the altering rules are scriptable.

Finally, whilst the UI isn’t going to win any design awards, it is functional and great for debugging during development. For full-time dashboards, consider one of the many dashboarding tools that support Prometheus such as PromDash or Grafana. This assertive decision not to include a fully-fledged dashboard is another great decision. It means that Prometheus can concentrate on what it does best, monitoring.

Adding Prometheus Metrics to Go Applications

More often than not when developing microservices in Go, we make use of the Go Kit toolkit. Go Kit makes it incredibly easy to add Prometheus monitoring, which is supported out-of-the-box.

Prometheus monitoring comes from the Metrics package, in the form of a decorator pattern, which is the same pattern used for Go Kit’s logging. The middleware function decorates a service by implementing the same interface and intercepting the call from an endpoint. For example, if you imagine you provided a Count method on your service, then the interface would look like:

type Service interface {

Count() (int64, error)

}

Then to make the metrics middleware, we would pass in Go Kit’s implementation of Prometheus’ counter and histogram with:

type instrumentingMiddleware struct {

requestCount metrics.Counter

requestLatency metrics.TimeHistogram

countResult metrics.Histogram

next Service

}

Finally, we would decorate the service with a function that provides a count of the number of hits and an estimate of the latency of the service:

func (mw instrumentingMiddleware) Count() (int64, error) {

defer func(begin time.Time) {

methodField := metrics.Field{Key: "method", Value: "count"}

errorField := metrics.Field{Key: "error", Value: fmt.Sprintf("%v", err)}

mw.requestCount.With(methodField).With(errorField).Add(1)

mw.requestLatency.With(methodField).With(errorField).Observe(time.Since(begin))

mw.countResult.Observe(int64(n))

}(time.Now())

return mw.next.Count()

}

In the method that wires the service and middleware together, you would then simply create new instances of the metrics objects and add a rest endpoints called /metrics that returns the handlerstdprometheus.Handler(). That’s it!

For complete examples, see the Go Kit documentation or any of the Go-based services in the Microservices-Demo repositories.

Adding Prometheus Metrics to Java Applications

Prometheus provides libraries for Java applications which can be used directly, but we often use Spring and Spring Boot, and it would be a shame to not take advantage of Spring Boot Actuator. Hence, with a huge chunk of help from Johan Zietsman’s blog post, it is reasonably easy to get a lot of monitoring for very little code.

First you need to include the actuator and prometheus dependencies in your maven/gradle file.

org.springframework.boot

spring-boot-starter-actuator

io.prometheus

simpleclient

RELEASE

io.prometheus

simpleclient_common

RELEASE

Next, we need to provide code to convert Actuator’s metrics into a format that Prometheus can understand. Counters and gauges are stored in maps, and the name of the actuator metric is sanitised into one that is Prometheus-friendly.

import io.prometheus.client.CollectorRegistry;

import io.prometheus.client.Counter;

import io.prometheus.client.Gauge;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.actuate.metrics.Metric;

import org.springframework.boot.actuate.metrics.writer.Delta;

import org.springframework.boot.actuate.metrics.writer.MetricWriter;

import java.util.concurrent.ConcurrentHashMap;

import java.util.concurrent.ConcurrentMap;

public class PrometheusMetricWriter implements MetricWriter {

private final ConcurrentMap<String, Counter> counters = new ConcurrentHashMap<>();

private final ConcurrentMap<String, Gauge> gauges = new ConcurrentHashMap<>();

private CollectorRegistry registry;

@Autowired

public PrometheusMetricWriter(CollectorRegistry registry) {

this.registry = registry;

}

@Override

public void increment(Delta<?> delta) {

counter(delta.getName()).inc(delta.getValue().doubleValue());

}

@Override

public void reset(String metricName) {

counter(metricName).clear();

}

@Override

public void set(Metric<?> value) {

gauge(value.getName()).set(value.getValue().doubleValue());

}

private Counter counter(String name) {

String key = sanitizeName(name);

return counters.computeIfAbsent(key, k -> Counter.build().name(k).help(k).register(registry));

}

private Gauge gauge(String name) {

String key = sanitizeName(name);

return gauges.computeIfAbsent(key, k -> Gauge.build().name(k).help(k).register(registry));

}

private String sanitizeName(String name) {

return name.replaceAll("[^a-zA-Z0-9_]", "_");

}

}

Then we need to provide endpoints for the metrics. This first file writes the map into the admittedly crazy Prometheus text format.

import io.prometheus.client.CollectorRegistry;

import io.prometheus.client.exporter.common.TextFormat;

import org.springframework.boot.actuate.endpoint.AbstractEndpoint;

import java.io.IOException;

import java.io.StringWriter;

import java.io.Writer;

public class PrometheusEndpoint extends AbstractEndpoint {

private CollectorRegistry registry;

public PrometheusEndpoint(CollectorRegistry registry) {

super("prometheus", false, true);

this.registry = registry;

}

@Override

public String invoke() {

Writer writer = new StringWriter();

try {

TextFormat.write004(writer, registry.metricFamilySamples());

} catch (IOException e) {

e.printStackTrace();

}

return writer.toString();

}

}

And then an Spring MVC endpoint is created to host the data.

import io.prometheus.client.exporter.common.TextFormat;

import org.springframework.boot.actuate.endpoint.mvc.AbstractEndpointMvcAdapter;

import org.springframework.boot.actuate.endpoint.mvc.HypermediaDisabled;

import org.springframework.http.HttpStatus;

import org.springframework.http.ResponseEntity;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RequestMethod;

import org.springframework.web.bind.annotation.ResponseBody;

import java.util.Collections;

public class PrometheusMvcEndpoint extends AbstractEndpointMvcAdapter {

public PrometheusMvcEndpoint(PrometheusEndpoint delegate) {

super(delegate);

}

@RequestMapping(method = RequestMethod.GET, produces = TextFormat.CONTENT_TYPE_004)

@ResponseBody

@HypermediaDisabled

protected Object invoke() {

if (!getDelegate().isEnabled()) {

return new ResponseEntity<>(

Collections.singletonMap("message", "This endpoint is disabled"),

HttpStatus.NOT_FOUND);

}

return super.invoke();

}

}

And finally some configuration to wire it all together. You will notice that the endpoint is created on /prometheus and not the standard/metrics. This is because Actuator is still running, and exposes itself on /metrics. You aren’t able to override this functionality (easily) so it is simpler to use a different endpoint and change the Prometheus configuration.

import io.prometheus.client.CollectorRegistry;

import org.springframework.boot.actuate.autoconfigure.ExportMetricWriter;

import org.springframework.boot.actuate.autoconfigure.ManagementContextConfiguration;

import org.springframework.boot.actuate.condition.ConditionalOnEnabledEndpoint;

import org.springframework.boot.actuate.metrics.writer.MetricWriter;

import org.springframework.boot.autoconfigure.condition.ConditionalOnBean;

import org.springframework.context.annotation.Bean;

import works.weave.socks.cart.controllers.PrometheusEndpoint;

import works.weave.socks.cart.controllers.PrometheusMvcEndpoint;

import works.weave.socks.cart.monitoring.PrometheusMetricWriter;

@ManagementContextConfiguration

public class PrometheusEndpointContextConfiguration {

@Bean

public works.weave.socks.cart.controllers.PrometheusEndpoint prometheusEndpoint(CollectorRegistry registry) {

return new PrometheusEndpoint(registry);

}

@Bean

@ConditionalOnBean(PrometheusEndpoint.class)

@ConditionalOnEnabledEndpoint("prometheus")

PrometheusMvcEndpoint prometheusMvcEndpoint(PrometheusEndpoint prometheusEndpoint) {

return new PrometheusMvcEndpoint(prometheusEndpoint);

}

@Bean

CollectorRegistry collectorRegistry() {

return new CollectorRegistry();

}

@Bean

@ExportMetricWriter

MetricWriter prometheusMetricWriter(CollectorRegistry registry) {

return new PrometheusMetricWriter(registry);

}

}

That’s it! Admittedly, this isn’t perfect. With this configuration it isn’t possible to see individual service endpoints (because you haven’t coded them), but it does provide a lot of functionality for very little code. In the future we intend to make this automatic by providing an artefact that you can @Enable.

To see an example of this in action, visit any of the Java-based services in the Microservices-Demo repositories. For example the carts repository.

Previous article

Previous article