In this post we're going to take a quick look at how you can mount the Docker socket inside a container in order to create "sibling" containers. One of my colleagues calls this DooD (Docker-outside-of-Docker) to distinguish it from DinD (Docker-in-Docker), where a complete and isolated version of Docker is installed inside a container. DooD is simpler than DinD (in terms of configuration, at least) and notably allows you to reuse the Docker images and cache on the host. By contrast, you may prefer to use DinD if you want to keep your images hidden and isolated from the host.

To explain how DooD works, we'll look at using DooD with a Jenkins container so that we can create and test containers in Jenkins tasks. We want to create these containers as the jenkins user, which makes things a little trickier than using the root user. This is very similar to the technique described by Pini Reznik in "Continuous Delivery with Docker on Mesos In Less than a Minute", but we're going to use sudo to avoid the issues Pini faced with adding the user to the docker group.

We'll be using the official Jenkins image as a base, which makes everything pretty straightforward.

Create a new Dockerfile with the following contents:

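```dockerfile
FROM jenkins:1.596

# Switch to root to install sudo and edit /etc/sudoers
USER root
RUN apt-get update \
      && apt-get install -y sudo \
      && rm -rf /var/lib/apt/lists/*

# Let the jenkins user run any command (including docker) via sudo without a password
RUN echo "jenkins ALL=NOPASSWD: ALL" >> /etc/sudoers

# Drop back to the unprivileged jenkins user
USER jenkins

# Install the plug-ins listed in plugins.txt using the plugins.sh script from the base image
COPY plugins.txt /usr/share/jenkins/plugins.txt
RUN /usr/local/bin/plugins.sh /usr/share/jenkins/plugins.txt
```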

We need to give the jenkins user sudo privileges in order to run Docker commands inside the container. Alternatively, we could have added the jenkins user to the docker group, which avoids the need to prefix every Docker command with 'sudo', but is non-portable because the gid of the docker group differs from host to host (as discussed in Pini's article).
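To see why a fixed gid is fragile, you can check which group owns the socket on each host. This is a minimal sketch, assuming a Linux host with GNU coreutils (`stat -c`); the function name `docker_socket_gid` is hypothetical, and the default path is the standard `/var/run/docker.sock`.

```shell
#!/bin/sh
# Hypothetical helper: print the numeric group id (gid) that owns the Docker
# socket. Assumes GNU stat (Linux); BSD/macOS stat uses different flags.
docker_socket_gid() {
  sock=${1:-/var/run/docker.sock}   # default Docker socket location
  stat -c '%g' "$sock"
}
```

Running `docker_socket_gid` on two different hosts may print two different numbers, so a docker group baked into the image with a fixed gid is not guaranteed to match the group that owns the mounted socket.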

The last two lines install any plug-ins listed in a plugins.txt file. I would recommend at least the following:

```
$ cat plugins.txt
scm-api:latest
git-client:latest
git:latest
greenballs:latest
```

If you don't want to install any plug-ins, either create an empty plugins.txt file or remove the last two lines from the Dockerfile. None of the plug-ins are required for the purposes of this post.
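If you are starting from scratch, a heredoc is a convenient way to create plugins.txt next to the Dockerfile. A minimal sketch, assuming the `name:version` line format shown above; the commented-out line creates the empty file instead.

```shell
#!/bin/sh
# Write the recommended plug-in list, one <plugin>:<version> entry per line,
# into plugins.txt in the current directory (the Docker build context).
cat > plugins.txt <<'EOF'
scm-api:latest
git-client:latest
git:latest
greenballs:latest
EOF

# For no plug-ins at all, an empty file still satisfies the COPY instruction:
# : > plugins.txt
```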

Now build the image and run the container, mapping in the Docker socket and the docker binary.

```
$ docker build -t myjenk .
...
Successfully built 471fc0d22bff
$ docker run -d -v /var/run/docker.sock:/var/run/docker.sock \
    -v "$(which docker)":/usr/bin/docker -p 8080:8080 myjenk
```
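Once the container is up, a quick way to confirm both mounts from the host is to run `docker version` inside it: the server details are only reported if the mounted socket reaches the host's daemon, otherwise the command ends with a connection error. A minimal sketch, assuming a single running container started from the `myjenk` image; the function name is hypothetical, and `DOCKER` can be overridden (e.g. `DOCKER=echo`) to print the command instead of running it.

```shell
#!/bin/sh
# Hypothetical helper: check that the jenkins user inside the container can
# reach the host's Docker daemon through the mounted socket and binary.
# Set DOCKER=echo to print the command instead of running it.
check_jenkins_docker() {
  cid=$1   # id or name of the running Jenkins container
  ${DOCKER:-docker} exec "$cid" sudo docker version
}
```

For example: `check_jenkins_docker "$(docker ps -q --filter ancestor=myjenk)"`. Because `docker exec` runs as the image's default user, the command runs as jenkins, just like a Jenkins job would.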

You should now have a running Jenkins container, accessible at http://localhost:8080, that is capable of running Docker commands. We can quickly test this out with the following steps:

- Open the Jenkins home page in a browser and click the "create new jobs" link.

- Enter the item name (e.g. "docker-test"), select "Freestyle project" and click OK.

- On the configuration page, click "Add build step" then "Execute shell".

- In the command box, enter "sudo docker run hello-world".

- Click "Save".

- Click "Build Now".

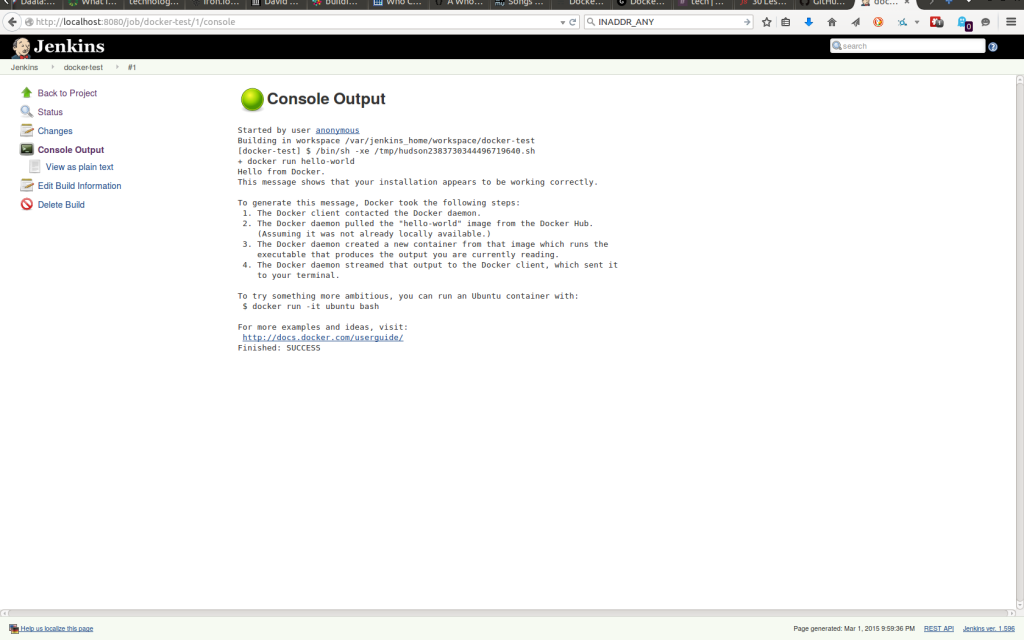

With any luck, you should now have a green ball (or a blue one, if you skipped the greenballs plug-in). If you click on the ball and select "Console Output", you should see the output of the hello-world container, starting with a "Hello from Docker" message.

Great! We can now successfully run Docker commands in our Jenkins container. Be aware that this setup has a significant security issue: the jenkins user effectively has root access to the host. For example, Jenkins can create containers that mount arbitrary directories from the host. For this reason, make sure that the container is only accessible internally to trusted users, and consider using a VM to isolate Jenkins from the rest of the host.
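To make the risk concrete: nothing stops a job from bind-mounting the host's root filesystem into a new container. The sketch below is for illustration on a throwaway machine only; `busybox` is just a convenient small image, the function name is hypothetical, and `DOCKER` can be overridden (e.g. `DOCKER=echo`) to print the command rather than run it.

```shell
#!/bin/sh
# Hypothetical demonstration of why "sudo docker" amounts to root on the host:
# the host's / is bind-mounted at /host, so the container (running as root)
# can list /root, a directory normally readable only by the host's root user.
# Set DOCKER=echo to print the command instead of running it.
show_host_root_access() {
  ${DOCKER:-sudo docker} run --rm -v /:/host busybox ls -la /host/root
}
```

Limiting who can reach Jenkins helps: for example, publishing the port with `-p 127.0.0.1:8080:8080` instead of `-p 8080:8080` keeps the web UI reachable only from the host itself.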

There are other options, principally Docker-in-Docker (DinD) and using HTTPS to talk to the Docker daemon. DinD isn't really more secure, because it requires a privileged container, but it does avoid the need for sudo. The main disadvantage of DinD is that you don't get to reuse the image cache from the host (although this may be useful if you want a clean environment for your test containers that is isolated from the host). Exposing the daemon over HTTPS doesn't require sudo and keeps using the host's image cache, but is arguably the least secure option due to the increased attack surface from opening a port.
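For comparison, this is roughly what the HTTPS option looks like from the client side: instead of a mounted socket, the docker client is pointed at a TCP endpoint and given TLS certificates through its standard environment variables (`DOCKER_HOST`, `DOCKER_TLS_VERIFY`, `DOCKER_CERT_PATH`). A minimal sketch; the host name, certificate directory and function name are placeholders, and 2376 is the conventional port for Docker over TLS. Configuring the daemon side is not covered here.

```shell
#!/bin/sh
# Hypothetical helper: configure the docker client in this shell to talk to a
# daemon over TLS rather than through /var/run/docker.sock.
use_docker_tls() {
  export DOCKER_HOST="tcp://$1:2376"   # $1: daemon host name (placeholder)
  export DOCKER_TLS_VERIFY=1           # verify the daemon's certificate
  export DOCKER_CERT_PATH="$2"         # $2: dir holding ca.pem, cert.pem, key.pem
}
```

After `use_docker_tls docker.example.com "$HOME/certs"`, plain `docker` commands in that shell go to the remote daemon, with no socket mount and no sudo involved.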

I plan to take a more in-depth look at securely setting up Docker over HTTPS in a later post.
