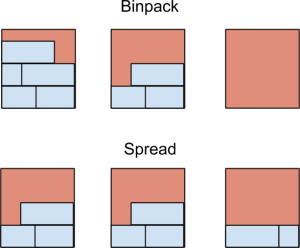

Docker Swarm - Docker's native clustering solution - ships with two main scheduling strategies, spread and binpack. The spread strategy will attempt to spread containers evenly across hosts, whereas the binpack strategy will place containers on the most-loaded host that still has enough resources to run the given containers. The advantage of spread is that should a host go down, the number of affected containers is minimized. The advantages of binpack are that it drives resource usage up and can help ensure there is spare capacity for running containers that require significant resources.

In the long-run, I believe we will see a move towards binpack style scheduling in order to reduce hosting costs. The risk of service downtime can be mitigated by using stateless microservices where possible - such services can be automatically and quickly migrated to new hosts with minimal disruption to availability.

However, if you use binpack today, you need to be aware of how to deal with co-scheduling of containers - how to ensure two or more containers are always run on the same host.

To explain fully, consider the scenario, where we have two hosts with spare capacity, one is nearly full and the other is empty. We can easily mock this situation by provisioning a couple of VMs with Docker Machine:

docker run swarm create

(out) 7a6441b8b92448475fb3f8e166ed2170

docker-machine create -d virtualbox --swarm --swarm-master --swarm-strategy binpack --swarm-discovery token://7a6441b8b92448475fb3f8e166ed2170 swarm-1

(out) ...

docker-machine create -d virtualbox --swarm --swarm-strategy binpack --swarm-discovery token://7a6441b8b92448475fb3f8e166ed2170 swarm-2

(out) ...

eval $(docker-machine env --swarm swarm-1)

docker info

(out) Containers: 3

(out) Images: 8

(out) Role: primary

(out) Strategy: binpack

(out) Filters: health, port, dependency, affinity, constraint

(out) Nodes: 2

(out) swarm-1: 192.168.99.103:2376

(out) └ Containers: 2

(out) └ Reserved CPUs: 0 / 1

(out) └ Reserved Memory: 0 B / 1.021 GiB

(out) └ Labels: executiondriver=native-0.2, kernelversion=4.1.12-boot2docker, operatingsystem=Boot2Docker 1.9.0 (TCL 6.4); master : 16e4a2a - Tue Nov 3 19:49:22 UTC 2015, provider=virtualbox, storagedriver=aufs

(out) swarm-2: 192.168.99.104:2376

(out) └ Containers: 1

(out) └ Reserved CPUs: 0 / 1

(out) └ Reserved Memory: 0 B / 1.021 GiB

(out) └ Labels: executiondriver=native-0.2, kernelversion=4.1.12-boot2docker, operatingsystem=Boot2Docker 1.9.0 (TCL 6.4); master : 16e4a2a - Tue Nov 3 19:49:22 UTC 2015, provider=virtualbox, storagedriver=aufs

(out) CPUs: 2

(out) Total Memory: 2.043 GiB

(out) Name: 4da00a74a9b1

And bring up a container using up half the memory on a host (note that when using the binpack strategy, you must always specify resource usage):

docker run -d --name web -m 512MB nginx

(out) 55c099f324e7a95aff47539231fdad3431077ee80200d418c49c10f30d5c8d52

docker info

(out) Containers: 4

(out) Images: 8

(out) Role: primary

(out) Strategy: binpack

(out) Filters: health, port, dependency, affinity, constraint

(out) Nodes: 2

(out) swarm-1: 192.168.99.103:2376

(out) └ Containers: 3

(out) └ Reserved CPUs: 0 / 1

(out) └ Reserved Memory: 512 MiB / 1.021 GiB

(out) └ Labels: executiondriver=native-0.2, kernelversion=4.1.12-boot2docker, operatingsystem=Boot2Docker 1.9.0 (TCL 6.4); master : 16e4a2a - Tue Nov 3 19:49:22 UTC 2015, provider=virtualbox, storagedriver=aufs

(out) swarm-2: 192.168.99.104:2376

(out) └ Containers: 1

(out) └ Reserved CPUs: 0 / 1

(out) └ Reserved Memory: 0 B / 1.021 GiB

(out) └ Labels: executiondriver=native-0.2, kernelversion=4.1.12-boot2docker, operatingsystem=Boot2Docker 1.9.0 (TCL 6.4); master : 16e4a2a - Tue Nov 3 19:49:22 UTC 2015, provider=virtualbox, storagedriver=aufs

(out) CPUs: 2

(out) Total Memory: 2.043 GiB

(out) Name: 4da00a74a9b1

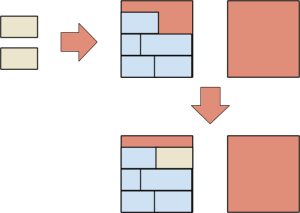

Now suppose we want to start two containers, a web application and its associated redis cache. We want the cache to run on the same host as application, so we need to use an affinity constraint when starting the application container (using --link or --volumes-from would also result in the same constraint). We can try to do this with the following code:

docker run -d --name cache -m 512MB redis

(out) abec45a34912397e1f335714c856d47472855e61d0a4787be70f1088cfd30a64

docker run -d --name app -m 512MB -e affinity:container==cache nginx

(out) Error response from daemon: no resources available to schedule container

What went wrong? Swarm scheduled the first container on the already partly full host as per the binpack strategy. When it came time to schedule the application container, there was no room left on the host with cache, so Swarm was forced to give up and return an error.

Fixing this isn't entirely trivial. We can't remove the cache and schedule it again, as Swarm will use the same host again and we end up with the same problem. We could start another cache container and then the application container, then remove the original cache, but this feels a bit clunky and wasteful (there may also be issues with the first container claiming names or registering with other services). Another fix is to start the first container with a constraint which forces it to be started on the host with sufficient free resources for both containers e.g:

docker run -d --name cache -m 512MB -e constraint:node==swarm-2 redis

(out) 95b66cb5c7a01b641a33d6cd20e32b33659e218fb9d6ba2ff352048901af2b57

docker run -d --name app -m 512MB -e affinity:container==cache nginx

(out) d67a815a064f2e69492ab882f5c3b04dc788b756cb3a7753a26cb94c00417b77

Success, but this still isn't entirely satisfactory. We are now manually managing hosts, which is exactly what we wanted Swarm to do for us. Also, there could be an issue where another user or system schedules containers at the same time as us and fills up the second host before we get a chance to start the second container. Another possibility is to sidestep the issue by creating a single large container which contains both the application and cache. This may be best current solution, but feels unidiomatic and requires extra work.

It's not entirely clear how often users will need to co-schedule containers like this - in some ways it can be considered an anti-pattern due to the tight-coupling of services. However, assuming co-scheduling is sometimes desirable and useful, what we really want is a native way to tell Swarm to schedule two containers at the same time, as a single lump. This is the idea behind "pods" in Kubernetes, and implementing a similar idea in Docker has been discussed at some length on github issue 8781, but it currently doesn't appear to have much support from core Docker engineers. Given this is the case, what other potential solutions are possible going forward? A few things I can think of (these are all off the top of my head and may be fundamentally flawed):

- A "holding" state, similar to Docker create, where containers are defined but not scheduled on a host. Both containers can then be created and started at the same time. At the moment, Docker create can't be used for this, as Swarm allocates resources during create, not run. Something like:

docker hold -d --name cache -m 512MB redis

docker hold -d --name app -m 512MB -e affinity:container==cache nginx

docker unhold redis cache

(out) ...

- A "forward" affinity where a container isn't scheduled until its dependency is started. Something like:

docker run -d --name cache -m 512MB -e forward-affinity:container==nginx redis

(out) Delaying container start until nginx container is scheduled

docker run -d --name app -m 512MB -e affinity:container==cache nginx

(out) 95b66cb5c7a01b641a33d6cd20e32b33659e218fb9d6ba2ff352048901af2b57

(out) d67a815a064f2e69492ab882f5c3b04dc788b756cb3a7753a26cb94c00417b77

- Swarm could automatically move the first container to the host with space for both containers when the second container with the affinity is started. This would require the system to handle the container being stopped and migrated. Also note that Swarm does not currently support node rebalancing, but this is being actively worked on.

Some of these suggestions (holding states and forward affinities) really seem like a half-way house to pods. I have been unable to find much information on what direction Docker are heading in, so it will be interesting to see how things develop. In the meantime, be aware of this issue and consider your options if you want to use Swarm and the binpack scheduling strategy.

Previous article

Previous article