Docker Universal Control Plane (UCP) is Docker's commercial solution for managing containerised applications, either in a public cloud or on-premise. It is built on top of Docker Swarm, and all UCP components themselves run as containers.

This post will guide you through the installation of UCP and the integration of Interlock to dynamically configure HAProxy as a load balancer.

Installation

Docker provides a ucp tool to install the required components. The ucp tool itself runs via Docker and launches the appropriate containers. It comes with subcommands such as “install”, “join” or “uninstall”. For an overview of all available commands, use:

docker run --rm -it docker/ucp --help

An interactive installation mode guides you through the process. Launch it like this:

docker run --rm -it -v /var/run/docker.sock:/var/run/docker.sock --name ucp docker/ucp install -i --host-address 172.17.9.102

Because this setup uses Vagrant, `--host-address` supplies the installer with a host address that is reachable from outside: in this case, the statically assigned IP address of the bridged interface (172.17.9.102). Mounting the Docker socket into the installer container is what allows it to manage the host's containers from inside a container.
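If you are unsure which address to pass, you can read it from the bridged interface inside the VM. The sketch below assumes the interface is called `eth1` (common for a Vagrant bridged or private network, but check your own VM) and that the `ip` tool from iproute2 is installed; the helper name `host_address_from_ip` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the first IPv4 address from the one-line
# output of `ip -o -4 addr show dev <iface>`, which looks like:
#   3: eth1    inet 172.17.9.102/24 brd 172.17.9.255 scope global eth1 ...
host_address_from_ip() {
  # Field 3 is "inet", field 4 is "address/prefix"; strip the prefix length.
  awk '$3 == "inet" { sub(/\/.*/, "", $4); print $4; exit }'
}

# Usage on the VM (the interface name eth1 is an assumption):
#   HOST_ADDRESS="$(ip -o -4 addr show dev eth1 | host_address_from_ip)"
#   docker run --rm -it -v /var/run/docker.sock:/var/run/docker.sock \
#     --name ucp docker/ucp install -i --host-address "$HOST_ADDRESS"
```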

The command pulls several images and prompts for the values it needs to complete the installation. When it finishes, it tells you how to log in to the UCP GUI:

INFO[0015] Login as "admin"/(your admin password) to UCP at https://172.17.9.102:443

To add a controller replica for high availability, run the ucp tool with `join --replica` on another server. High availability requires at least two replicas.

docker run --rm -it -v /var/run/docker.sock:/var/run/docker.sock --name ucp docker/ucp join --replica -i --host-address 172.17.9.103
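Why two replicas? One plausible reading, assuming the replicated key-value store behind the controllers uses majority quorum (as Raft-based stores such as etcd do): a cluster of n controllers stays available as long as a majority survives, so it tolerates floor((n - 1) / 2) failures. The controller plus one replica (n = 2) tolerates no failure at all; the controller plus two replicas (n = 3) tolerates one. The function name below is illustrative.

```shell
#!/usr/bin/env bash
# Controller failures a majority-quorum cluster of n members can survive.
# Assumes majority quorum; the function name is hypothetical.
tolerated_failures() {
  local n="$1"
  echo $(( (n - 1) / 2 ))
}

# Quorum size is a strict majority: floor(n / 2) + 1.
for n in 1 2 3 5; do
  echo "controllers=$n quorum=$(( n / 2 + 1 )) tolerated_failures=$(tolerated_failures "$n")"
done
```

This is also why odd cluster sizes are preferred: going from 3 to 4 members raises the quorum without tolerating an extra failure.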

Now add an Engine node to the UCP cluster:

docker run --rm -it -v /var/run/docker.sock:/var/run/docker.sock --name ucp docker/ucp join -i --host-address 172.17.9.104

The UCP cluster is now up and running. Let’s see what we can do with it.

Running

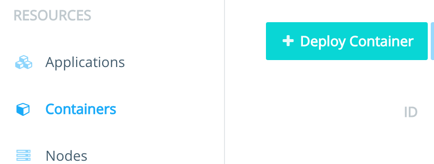

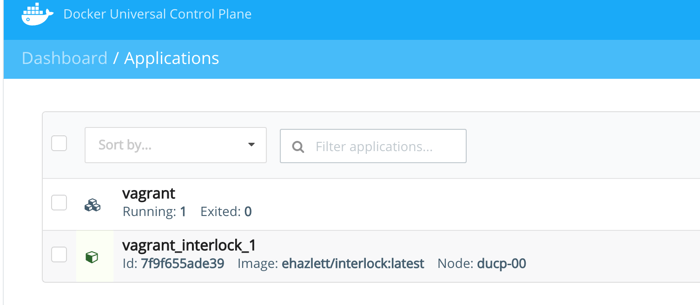

To deploy a container via the GUI, navigate to “Containers” and then “Deploy Container”.

Fill out the form with the desired values.

Press “Run Container” on the right to start the container. It is then accessible on port 8080.

You can test this with:

curl -i http://172.17.9.105:8080

(out)HTTP/1.1 200 OK
(out)X-Powered-By: Express
(out)Content-Type: text/html; charset=utf-8
(out)Content-Length: 12
(out)ETag: W/"c-8O9wgeFTmsAO9bdhtPsBsw"
(out)Date: Thu, 18 Feb 2016 11:04:35 GMT
(out)Connection: keep-alive
(out)
(out)Hello world

It will just return a simple “Hello world”.

The same can be achieved via the CLI. Connect to one of the nodes and run:

docker run -d -p 8081:8080 --name hello-world-cli-v1 muellermich/nodejs-hello

You can see the container running with:

docker ps

(out)CONTAINER ID        IMAGE                      COMMAND       CREATED         STATUS         PORTS                    NAMES
(out)13d9f6710e48        muellermich/nodejs-hello   "npm start"   3 seconds ago   Up 3 seconds   0.0.0.0:8081->8080/tcp   hello-world-cli-v1
(out)6d69aa8e1a7d        muellermich/nodejs-hello   "npm start"   4 minutes ago   Up 4 minutes   0.0.0.0:8080->8080/tcp   hello-world-gui-v1

The container is also visible in the GUI.

To verify that the application is running, access the container from outside: determine which node the container runs on and which host port it is published on.
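From the CLI, one way to find the address is the PORTS column of `docker ps`. The hypothetical helper below turns an entry such as `0.0.0.0:8081->8080/tcp` into a URL. When the host part is `0.0.0.0` (all interfaces, as in the `docker ps` output above), it substitutes a node IP you pass in; 172.17.9.105 in the usage line merely stands in for whichever node runs the container.

```shell
#!/usr/bin/env bash
# Hypothetical helper: ports_to_url MAPPING [NODE_IP]
# MAPPING is one PORTS entry from `docker ps`, e.g. "0.0.0.0:8081->8080/tcp".
ports_to_url() {
  local mapping="${1%%->*}"      # keep "host:port" before "->", e.g. "0.0.0.0:8081"
  local host="${mapping%:*}"     # "0.0.0.0"
  local port="${mapping##*:}"    # "8081"
  # 0.0.0.0 means "all interfaces"; replace it with the node's address if given.
  if [ "$host" = "0.0.0.0" ] && [ -n "${2:-}" ]; then
    host="$2"
  fi
  printf 'http://%s:%s/\n' "$host" "$port"
}

# Usage (assumes a single port mapping per container):
#   docker ps --filter name=hello-world-cli-v1 --format '{{.Ports}}'
#   ports_to_url '0.0.0.0:8081->8080/tcp' 172.17.9.105   # prints http://172.17.9.105:8081/
```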

Next, let's add a load balancer and service discovery to the setup, so that new containers are registered automatically.

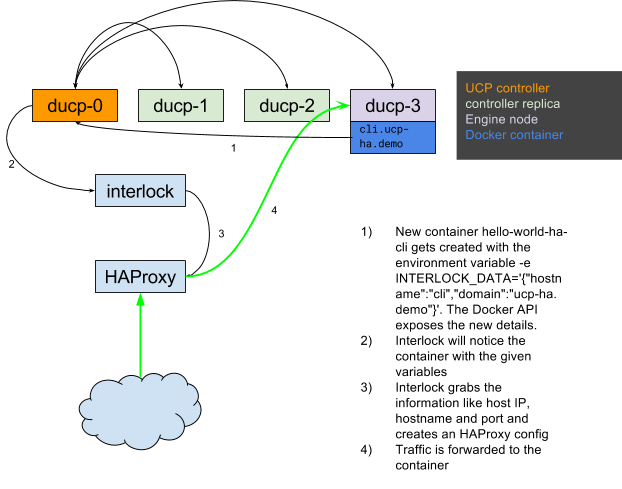

We will use HAProxy, also running in a container, as the load balancer. For service discovery we use Interlock, written by Docker employee Evan Hazlett and described as a “Dynamic, event-driven Docker plugin system using Swarm”. Interlock consumes the Docker Swarm event stream and the Docker API, which lets it listen for container events such as start and stop and act on them.

For each started or stopped container, it fetches attributes such as the service name, hostname, host IP and port from the Docker API. From this information it generates and deploys a new HAProxy configuration.
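To make the config-generation step concrete, here is a minimal sketch; it is not Interlock's actual code or template. It reads one line per running container (hostname, domain, host IP, published port), groups containers by virtual host, and emits one HAProxy frontend that routes on the `Host` header plus one round-robin backend per virtual host. The function name, the `host_`/`be_` naming scheme and the input format are assumptions for illustration, and the fragment assumes `mode http` is set in the `defaults` section.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of Interlock-style config generation (not Interlock's code).
# stdin:  one container per line as "<hostname> <domain> <host_ip> <port>"
# stdout: an HAProxy config fragment with one backend per virtual host.
render_haproxy_cfg() {
  awk '
    {
      vhost = $1 "." $2
      if (!(vhost in count)) order[++nvhosts] = vhost   # remember first-seen order
      count[vhost]++
      servers[vhost] = servers[vhost] sprintf("    server %s-%d %s:%s check\n", $1, count[vhost], $3, $4)
    }
    END {
      print "frontend http-default"
      print "    bind *:80"
      for (i = 1; i <= nvhosts; i++) {
        v = order[i]
        printf "    acl host_%s hdr(host) -i %s\n", v, v
        printf "    use_backend be_%s if host_%s\n", v, v
      }
      for (i = 1; i <= nvhosts; i++) {
        v = order[i]
        printf "backend be_%s\n    balance roundrobin\n%s", v, servers[v]
      }
    }'
}

# Example: two containers of the "cli" virtual host on different nodes (sample data).
printf '%s\n' \
  'cli ucp-ha.demo 172.17.9.104 32768' \
  'cli ucp-ha.demo 172.17.9.105 32769' | render_haproxy_cfg
```

In an event-driven setup, the input would be rebuilt and the config re-rendered and reloaded whenever `docker events --filter event=start --filter event=die` reports a change.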

We'll use docker-compose to launch Interlock on the UCP cluster. That way, Interlock also shows up as a new application in UCP. The compose file looks like this:

version: "2"
services:
  interlock:
    image: ehazlett/interlock:latest
    command: "--swarm-url tcp://172.17.9.102:2376 --swarm-tls-ca-cert /data/ca.pem --swarm-tls-cert /data/cert.pem --swarm-tls-key /data/key.pem --plugin haproxy start"
    restart: always
    environment:
      - "affinity:container==ucp-swarm-manager"
    ports:
      - "80:80"
    volumes:
      - "/var/lib/docker/volumes/ucp-swarm-node-certs/_data:/data:ro"

On the UCP controller (172.17.9.102), we just have to run:

docker-compose up -d

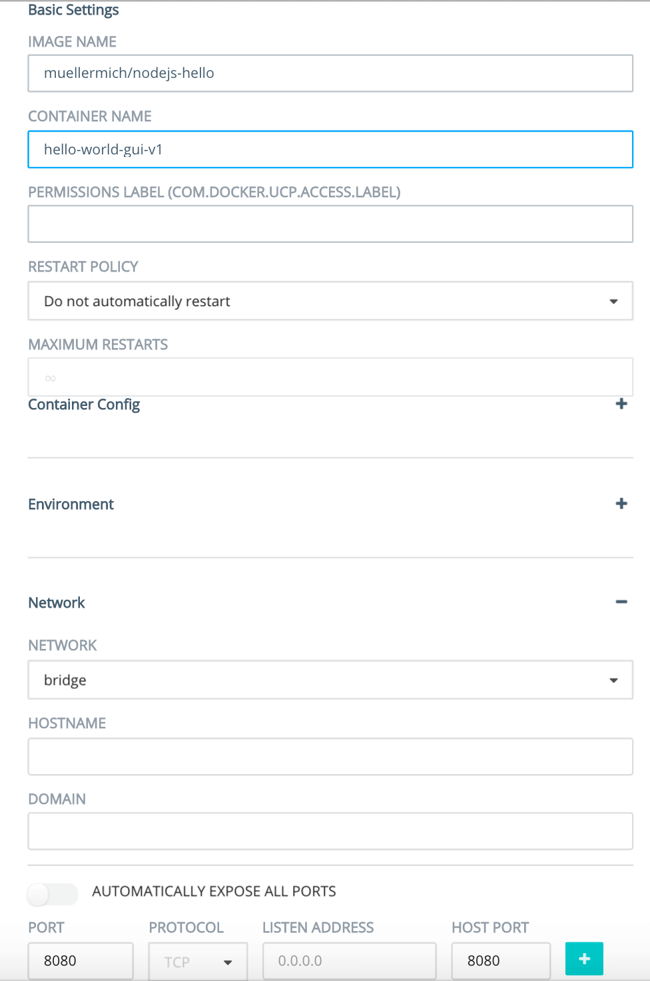

This brings up a container running HAProxy. Its stats page is available at `http://172.17.9.102/haproxy?stats`.

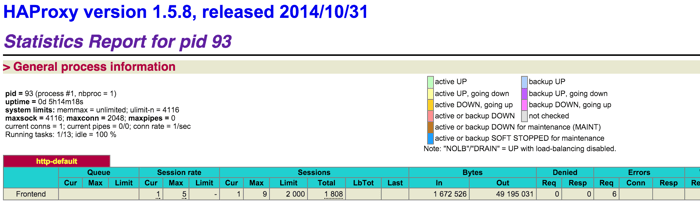
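The stats page can also be read from scripts: HAProxy serves the same data as CSV when `;csv` is appended to the stats URI. The sketch below (hypothetical helper name) prints proxy name, server name and status for each server row, skipping the header line and the aggregated FRONTEND/BACKEND rows. It relies on `status` being the 18th field of HAProxy's CSV stats format.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list "<proxy> <server> <status>" from HAProxy CSV stats.
# CSV fields used: 1 = pxname, 2 = svname, 18 = status.
haproxy_server_status() {
  awk -F, '!/^#/ && NF >= 18 && $2 != "FRONTEND" && $2 != "BACKEND" { print $1, $2, $18 }'
}

# Usage against the stats page of this setup:
#   curl -s 'http://172.17.9.102/haproxy?stats;csv' | haproxy_server_status
```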

In the GUI, the newly created application now appears under the application tab.

Now let's start a new container from the CLI and register it with our new HAProxy:

docker run -d -P -e INTERLOCK_DATA='{"hostname":"cli","domain":"ucp-ha.demo"}' --name hello-world-ha-cli muellermich/nodejs-hello

`-P` publishes all ports exposed by the container to random ports on the host. The environment variable passed with `-e INTERLOCK_DATA='{"hostname":"cli","domain":"ucp-ha.demo"}'` tells Interlock that this container should be served under the URL `http://cli.ucp-ha.demo/`.

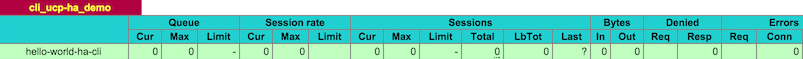

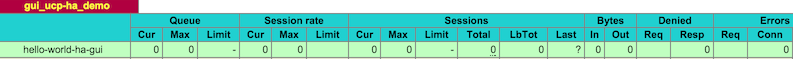

The HAProxy config is recreated automatically, as you can see on the HAProxy stats page:

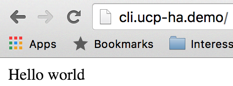

The application inside the container we just launched can be accessed via the browser or via curl. It simply returns “Hello world”.

Below you can see Interlock at work as it recreates the HAProxy configuration.
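Unless `ucp-ha.demo` resolves to the HAProxy host (for example via an `/etc/hosts` entry), curl has to send the virtual host name itself in the `Host` header. The sketch below uses two hypothetical helpers, one that builds the `INTERLOCK_DATA` value and one that requests a virtual host through HAProxy at 172.17.9.102; neither is part of Interlock or UCP.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; names are illustrative, not part of Interlock or UCP.

# INTERLOCK_DATA value for a hostname/domain pair, as passed with `docker run -e`.
interlock_data() {
  printf '{"hostname":"%s","domain":"%s"}\n' "$1" "$2"
}

# Request a virtual host through HAProxy without DNS by setting the Host header.
# Usage: curl_vhost HAPROXY_IP HOSTNAME DOMAIN
curl_vhost() {
  curl -s -H "Host: $2.$3" "http://$1/"
}

# Examples (require the running setup):
#   docker run -d -P -e INTERLOCK_DATA="$(interlock_data cli ucp-ha.demo)" \
#     --name hello-world-ha-cli muellermich/nodejs-hello
#   curl_vhost 172.17.9.102 cli ucp-ha.demo
```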

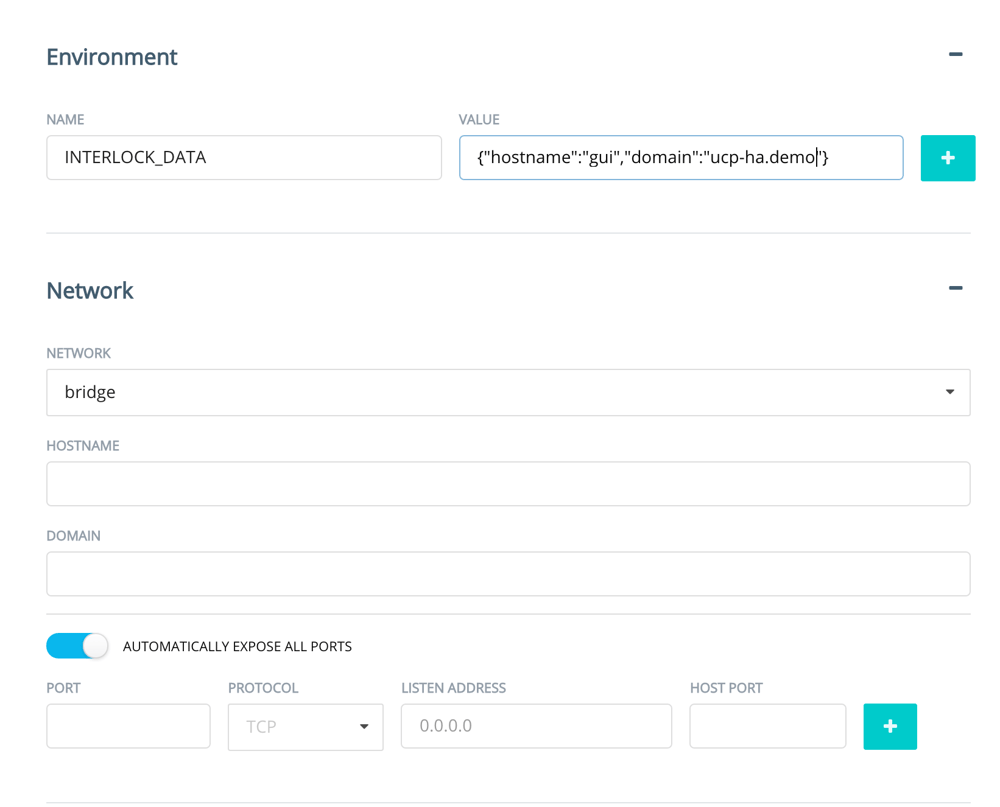

The same can be achieved via the GUI: enter the container details as above, add the environment variable for Interlock, and choose to expose all ports automatically.

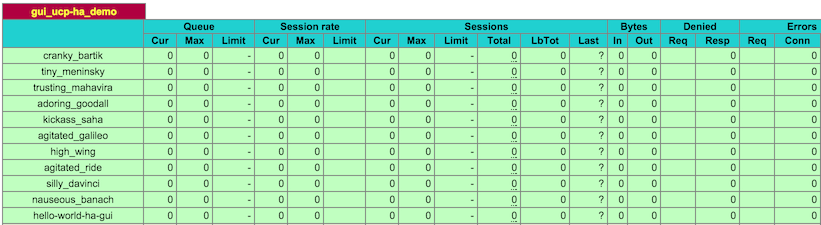

Again, this generates the corresponding HAProxy configuration.

You can now easily scale your application up in the GUI. Navigate to your container, click the sliders icon, and then choose “Scale” at the top right. Enter the number you want to scale up by, e.g. 10, and UCP spins up 10 new containers while HAProxy is reconfigured.
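As a rough CLI counterpart, you can start additional containers with the same `INTERLOCK_DATA`, and Interlock adds each one to the same backend. This is a sketch, not what UCP's scale action does internally: the function name, the container naming scheme and the `DOCKER` override (handy for a dry run with `DOCKER=echo`) are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: start COUNT extra containers that Interlock should
# register under cli.ucp-ha.demo. Set DOCKER=echo for a dry run.
scale_hello() {
  local count="$1" i
  for i in $(seq 1 "$count"); do
    ${DOCKER:-docker} run -d -P \
      -e INTERLOCK_DATA='{"hostname":"cli","domain":"ucp-ha.demo"}' \
      --name "hello-world-ha-cli-$i" muellermich/nodejs-hello
  done
}

# Dry run: print the ten docker commands instead of executing them.
#   DOCKER=echo scale_hello 10
```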

Closing thoughts

Setting up a cluster for Docker containers with UCP is simple and straightforward. With the addition of Interlock, it is a good starting point for a container infrastructure.

There is still work to do, though: rescheduling is missing, even though Docker Swarm 1.1 now has basic (experimental) support for it. I couldn’t find a way to make UCP start Docker Swarm in experimental mode, but I’m fairly sure this will be added in one of the next releases.

If you want to know more about rescheduling in Docker Swarm, please read the article “Rescheduling containers on node failures with Docker Swarm 1.1” by Maximilian Schoefmann.
